Timely access to high-quality research unquestionably improves program operations and policy development. The policy and research communities must seize opportunities for research to be used on a broader scale by putting evidence directly in the hands of those who can act on it to improve our society.

This is why I’m excited about the launch of RCT-YES, a software tool that Mathematica developed through a contract with the Institute of Education Sciences and is now making available for free to public program leaders and decision makers.

For 25 years, I have worked with my Mathematica colleagues, other researchers, and federal agencies to design and conduct rigorous large-scale evaluations of major education and employment programs, from Job Corps to Early Head Start to the Workforce Investment Act. What makes RCT-YES special is that it gives decision makers an unprecedented ability to conduct their own impact evaluations—without sacrificing rigor for speed—and to act on that evidence.

Expanding the Pool of Evaluators

RCT-YES is an important step toward making it easier to understand what works in public policy—a direction that the research and policy communities must continue to follow.

Policymakers’ awareness of the value of research evidence is rising. The Office of Management and Budget has directed the heads of federal departments and agencies to develop research agendas that strengthen program performance, using experimentation and innovation to test new approaches to program delivery. And Congress recently passed the bipartisan Evidence-Based Policymaking Commission Act to expand the use of research evidence in federal policymaking.

One way to strengthen policy research is to expand the pool of program evaluators beyond a small number of research firms and academics. For example, staff at state and local agencies, as well as at organizations receiving federal grants, can help produce evaluation research. These individuals can play a unique role in generating evidence by assessing which interventions to test, building “rapid-cycle” evaluations into day-to-day operations to test incremental program changes (as is often done in the private sector), and analyzing administrative data.

To date, a hurdle for local agencies and grantees has been a lack of the analytic skills, capacity, and time needed to conduct strong evaluations. Tools and technical assistance can help fill these gaps.

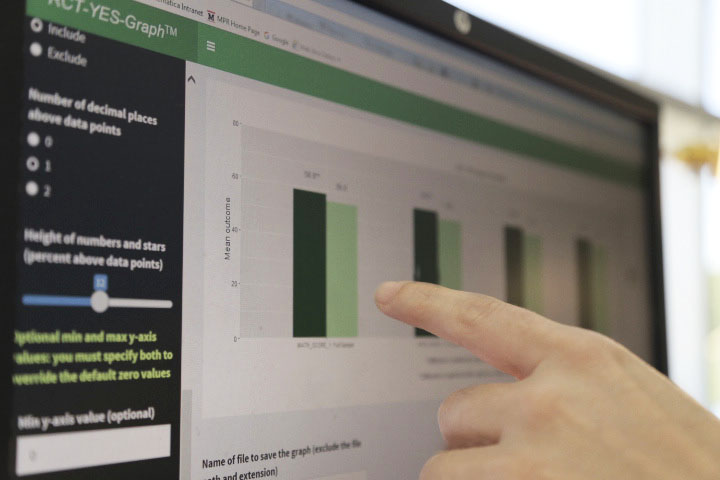

That is where RCT-YES fits in. This tool analyzes data and reports findings in a consistent way for a broad range of evaluation designs with treatment and control (comparison) groups. RCT-YES uses state-of-the-art statistical methods to estimate impacts once an evaluation has been implemented and the study data have been collected and cleaned.
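For readers curious about what "estimating impacts" means at its simplest, here is a minimal sketch of a treatment-versus-control comparison: the difference in average outcomes between the two groups, with a standard error. This is not RCT-YES code and should not be read as the tool's method; the function name `estimate_impact` and the outcome scores are hypothetical, made up purely for illustration, and the sketch assumes a simple design in which individuals were randomly assigned to one of two groups.

```python
import math
from statistics import mean, variance


def estimate_impact(treatment, control):
    """Return (impact, standard_error) for a simple two-group comparison.

    Hypothetical helper for illustration: the impact is the difference
    in mean outcomes, and the standard error combines the sample
    variance of each group divided by that group's size.
    """
    if len(treatment) < 2 or len(control) < 2:
        raise ValueError("each group needs at least two observations")
    impact = mean(treatment) - mean(control)
    se = math.sqrt(
        variance(treatment) / len(treatment)
        + variance(control) / len(control)
    )
    return impact, se


# Made-up outcome scores, for illustration only.
treatment_scores = [72, 75, 78, 81, 84]
control_scores = [70, 71, 74, 76, 79]

impact, se = estimate_impact(treatment_scores, control_scores)
print(f"Estimated impact: {impact:.2f} (standard error {se:.2f})")
```

Real evaluations often involve more than this, such as clustered or blocked designs, baseline covariates, and missing data, which is where a purpose-built tool earns its keep.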

Given our mission to improve public well-being, we have made the software available for free and designed it to be user friendly. It supports decision makers across the spectrum of vital public program areas, including education, health, employment, and child well-being.

RCT-YES is a significant advance, but it solves only one part of the puzzle. Moving forward, to help expand the pool of evaluators, the research community needs to develop a full suite of tools to empower practitioners and program operators to design and conduct rigorous impact evaluations. And the policy community must continue to invest in expanding the use of research evidence in developing and refining programs. Then we will be well on our way to solidifying a culture of evidence to better serve the public good.