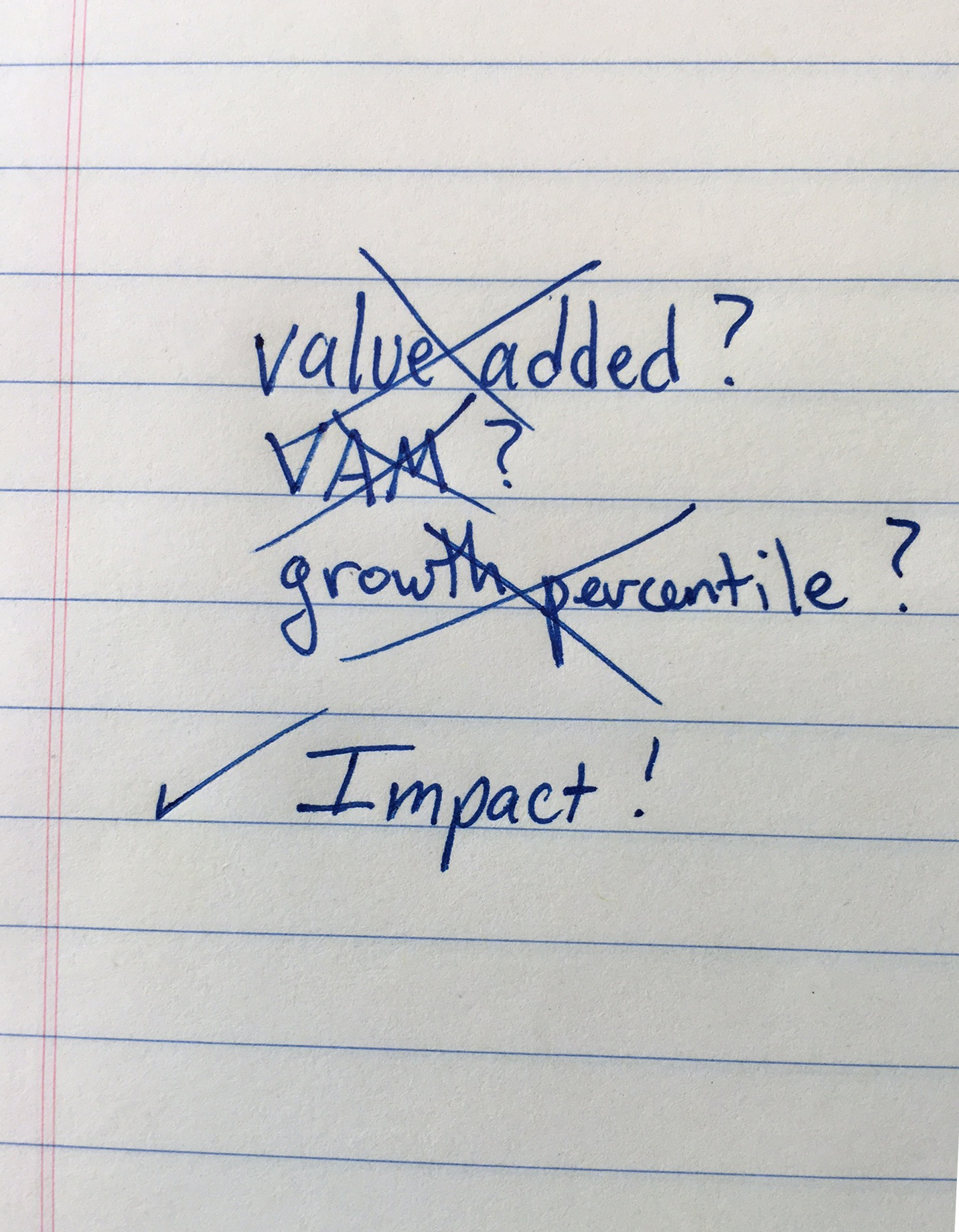

Indicators that gauge the impact of educators on test scores are often called "value-added" measures or VAMs. Although I have been using this term for the past 20 years in my own research, it's time to acknowledge how terrible it is and retire it, or at least phase it out.

The term comes from economics, where the value added is the unique contribution of an input to the value of an output in a production function, holding constant the effect of all other inputs. After a provocative 1981 article called "Throwing Money at Schools," in which Eric Hanushek questioned the productivity of many education inputs, education production function analysis became popular. For researchers toiling in obscurity, the term “value added” was fine for this analysis, but when it hit the mainstream some time in the 2000s, it started to feel a little wrong.

"Value added" is an unfortunate term. It sounds like we’ve measured the sum of all that teachers contribute or the sum of their value, which is dehumanizing as well as inaccurate. The real point is that we’ve estimated the teachers' impact on standardized test scores. It is just one aspect of a teacher's job, so it is not a synonym for teacher effectiveness. It is only as good as the tests we use to measure it, but it is still important. We want to know what impact educators do have on their students. We even care about the impact on test scores. That’s why it’s worth being very purposive about terminology, as in: “estimated impact on test scores.”

One word in that definition that is worth emphasizing is “estimated.” People usually skip over that, especially people who don’t love statistics, but it’s important. Estimate is a fancy way of saying “make our best guess.” Even though we know that these educated guesses can be off the mark, basic statistical principles can be used to quantify how far off the mark. Based on the best available research, the answer might be “not very far off.” Researchers have figured out clever ways to determine whether value-added measures get it right. They looked (here and here) at student performance before and after a high value-added teacher arrives. They have used randomized experiments (here and here) in which some students got a high value-added teacher and others got a lower value-added teacher and the only other difference between the groups of students was essentially whether their coin came up heads or tails. Therefore, the difference in the average outcomes for the groups of students can be attributed to the estimated impact, or value added, of the teacher. All of these studies show that having a high value-added teacher leads to higher student achievement later on (or better long-term outcomes).
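To make the logic of the randomized comparison concrete, here is a minimal sketch in Python. The scores are invented purely for illustration, and the function name `difference_in_means` is my own; real studies use far larger samples and more careful statistical models. The point is only that, under random assignment, the gap between the two group averages serves as the estimate of the teacher's impact on test scores.

```python
from statistics import mean


def difference_in_means(treated_scores, control_scores):
    """Estimated impact: average outcome of one group minus the other."""
    return mean(treated_scores) - mean(control_scores)


# Hypothetical test scores, invented for illustration only.
# Students were (in this made-up example) assigned to classrooms by coin flip.
high_va_classroom = [72, 80, 77, 85, 79]
lower_va_classroom = [70, 74, 73, 78, 75]

estimated_impact = difference_in_means(high_va_classroom, lower_va_classroom)
print(f"Estimated impact on test scores: {estimated_impact:.1f} points")
```

Because the coin flip is the only systematic difference between the groups, nothing else about the students needs to be modeled in this simple version.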

With best-guess estimates, we also worry about reliability, which is statistics-speak for the frequency with which we would get the same answer if we did the measurement twice. Again, it’s possible to calculate the reliability of educator impact measures and use it to characterize the margin of error, just as we do with public opinion polls. This is another reason we should not blithely ignore the “estimated” part of the definition.
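As a rough sketch of what these two ideas look like in practice, the Python below computes a simple reliability proxy (the correlation between two rounds of estimates for the same teachers) and a poll-style margin of error. All numbers are hypothetical, the function names are my own, and I am assuming a normal approximation with the conventional 1.96 multiplier for a 95 percent interval. Actual educator impact systems use more elaborate methods.

```python
from math import sqrt
from statistics import mean, stdev


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of numbers."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def margin_of_error(values, z=1.96):
    """Approximate 95% margin of error for the mean of `values`."""
    return z * stdev(values) / sqrt(len(values))


# Hypothetical impact estimates for the same five teachers in two years.
year_one = [0.10, -0.05, 0.20, 0.00, -0.15]
year_two = [0.08, -0.02, 0.15, 0.05, -0.10]

# Reliability proxy: how closely the second measurement tracks the first.
print(f"Year-to-year correlation: {pearson(year_one, year_two):.2f}")

# Hypothetical score gains for one teacher's students.
student_gains = [3.0, 5.5, 4.0, 6.5, 2.0, 5.0]
print(f"Average gain: {mean(student_gains):.2f} "
      f"plus or minus {margin_of_error(student_gains):.2f}")
```

A correlation near 1 would mean the two rounds of measurement largely agree; a wide margin of error is a signal to treat a single estimate with caution.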

The other linguistic norm I’d like to enforce is explicitly mentioning what the impact is on. If the outcome is test scores, then it's important to refer to the "impact on test scores," instead of on “student achievement” or “learning” or even “growth.” Simply calling it impact on test scores makes no value judgment about whether the tests are good or bad. The impact estimate is only as good as the student assessment being used.

Given all these limitations of estimates (concerns about systematic error, reliability, and the validity of the tests), the hard part is using the measures wisely. How do we take these weaknesses into account and yet still extract meaning from the imperfect measures? This is where the field needs to go: creating decision models that put tools into the hands of school leaders and other stakeholders, so they can make smart decisions by using the data wisely, reconciling them with non-test-score-based measures like classroom observation scores or student survey measures, and not overusing them.

Another phrase, "student growth modeling," has been adopted fairly widely but it has its own problems. Student growth models are models of how learning, measured by test scores, changes over time. The terminology blurs the line between students' contributions through their own effort and ability and teachers' contributions, which can differ based on how well the teacher can motivate, inspire, and explain to the student over a fixed period of time. If we are interested in just the teacher’s contribution, then the "student growth model" and its cousin, the "student growth percentile," are not useful constructs.

So, is the term “value added” nearing the end of its life span in education? This may be just a semantic issue, but it’s also about putting these educator impact estimates into perspective and avoiding the risk of false advertising. Building trust and understanding between data analysts and educators is the first step toward acting on data and improving education. Who will join this effort at accurate labeling? It can't happen overnight, but we have to start somewhere.